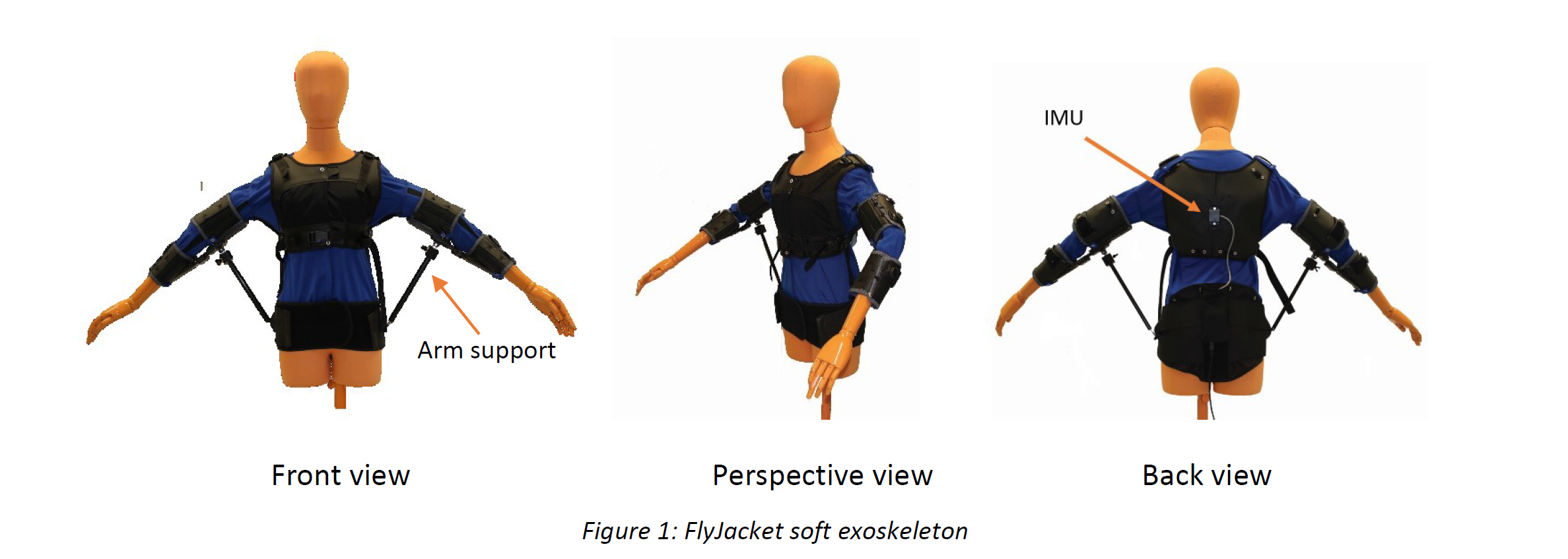

The Swiss National Centre of Competence in Research Robotics (NCCR Robotics) is investigating more intuitive and natural ways to interact with drones. To reach this goal, we studied which body movements are the most intuitive for controlling drones (Miehlbradt et al.) and developed a soft upper-limb exoskeleton called the FlyJacket (see Fig. 1 and Rognon et al.). The FlyJacket embeds an MTw Awinda wireless motion tracker (IMU) that records the user's body movements, which are then translated into commands and transmitted to the drone.

Enter the human-robot interface

Robots are becoming pervasive in both domestic and professional environments. Consequently, there is a growing demand for new human-robot interfaces (HRIs) to control and interact with robots. However, current HRIs, such as joysticks, keyboards, and touch screens, are neither natural nor intuitive for the user, as they require training and concentration during operation. In many cases, this limits the use of robots to highly trained professionals.

Among the various types of robots, drones are probably the fastest growing in personal and professional environments, because they can extend human perception and range of action in unprecedented ways. Fields of application include aerial mapping, agriculture, military applications, search-and-rescue, transportation and delivery. In most of these fields, drones work cooperatively with users, extending their spatial perception by providing information that would not be available from the ground. However, teleoperating a drone with joysticks or remote controllers is non-intuitive and challenging, and it becomes cognitively demanding during long operations. More intuitive control interfaces can improve flight efficiency, reduce errors, and allow users to shift their attention from controlling the drone to evaluating the information it provides. One way to achieve this is to build HRIs around natural human gestures captured by wearable sensors.

Body motion and measurements

Our study on naïve individuals showed that torso movements are a natural and immersive way of flying a fixed-wing drone (Miehlbradt et al.). We found that drone pitch can be intuitively controlled by bending the torso forward and backward in the sagittal plane, and drone roll by a combination of bending in the frontal plane and rotating about the longitudinal axis of the torso. Although the arms were not used to directly control the drone attitude in this flight style, we observed that participants instinctively spread out their arms when flying. To counter the arm fatigue this posture may cause, a passive arm support was integrated into the FlyJacket.
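To make the torso-based flight style above more concrete, the following Python sketch maps torso angles (measured relative to a neutral posture) to normalized pitch and roll commands. It is only an illustration under stated assumptions: the gains, the 60/40 weighting between frontal-plane bending and axial rotation, the saturation limit and the sign conventions are all hypothetical placeholders, and the actual mapping in Miehlbradt et al. is data-driven and is not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class TorsoAngles:
    """Torso angles in degrees, relative to a calibrated neutral posture."""
    flexion: float         # forward (+) / backward (-) bending, sagittal plane
    lateral_bend: float    # right (+) / left (-) bending, frontal plane
    axial_rotation: float  # right (+) / left (-) twist about the longitudinal axis


@dataclass
class DroneCommand:
    pitch: float  # normalized to [-1, 1]; assumed convention: positive = nose down
    roll: float   # normalized to [-1, 1]; assumed convention: positive = bank right


def clamp(value: float, limit: float = 1.0) -> float:
    """Saturate a command to [-limit, limit]."""
    return max(-limit, min(limit, value))


# Hypothetical tuning values, NOT taken from the FlyJacket studies.
PITCH_GAIN = 1.0 / 30.0  # 30 deg of flexion -> full pitch command
ROLL_GAIN = 1.0 / 30.0   # 30 deg of combined roll angle -> full roll command
BEND_WEIGHT = 0.6        # share of the roll angle coming from frontal-plane bending
TWIST_WEIGHT = 0.4       # share of the roll angle coming from axial rotation


def torso_to_command(angles: TorsoAngles) -> DroneCommand:
    # Pitch: bending the torso forward/backward in the sagittal plane.
    pitch = clamp(PITCH_GAIN * angles.flexion)
    # Roll: weighted combination of lateral bending and axial rotation.
    roll_angle = BEND_WEIGHT * angles.lateral_bend + TWIST_WEIGHT * angles.axial_rotation
    roll = clamp(ROLL_GAIN * roll_angle)
    return DroneCommand(pitch=pitch, roll=roll)


cmd = torso_to_command(TorsoAngles(flexion=15.0, lateral_bend=10.0, axial_rotation=20.0))
```

With these placeholder gains, 15° of forward flexion yields half of the maximum pitch command, and clamping keeps both commands bounded however far the user leans.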

Torso movements are recorded with an MTw Awinda wireless motion tracker located in the middle of the back, on a leather part reinforced with plastic. The inextensible leather element that holds this IMU provides a steady connection to the body, which enables precise tracking of torso motion without interference from the motion of other body parts, such as the arms. Our first study on the FlyJacket (Rognon et al.) used a simple flight style in which only the torso controls the drone. However, other flight styles can be implemented, and additional IMUs can be mounted on the inextensible element at the upper arms, forearms or pelvis to record the motion of these body parts.
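A common way to turn IMU orientations into body-segment angles is sketched below in Python. It assumes that each tracker reports its orientation as a unit quaternion (w, x, y, z) in a shared reference frame, and it expresses the torso orientation relative to a neutral posture captured at calibration time, so that the calibration posture (including the heading the user faced at that moment) reads as zero. The same hypothetical `relative_orientation` helper would give, for instance, the orientation of an upper-arm IMU relative to the torso IMU. All function names are illustrative, this is not the FlyJacket's actual processing pipeline, and which Euler angle corresponds to flexion, lateral bending or axial rotation depends on how the sensor is mounted on the back.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed unit norm


def quat_conjugate(q: Quat) -> Quat:
    w, x, y, z = q
    return (w, -x, -y, -z)


def quat_multiply(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def relative_orientation(q_ref: Quat, q: Quat) -> Quat:
    """Orientation q expressed relative to q_ref (a neutral posture or another
    body segment). For unit quaternions the inverse equals the conjugate."""
    return quat_multiply(quat_conjugate(q_ref), q)


def to_euler_zyx_deg(q: Quat) -> Tuple[float, float, float]:
    """Return (roll, pitch, yaw) in degrees, Z-Y-X (yaw-pitch-roll) convention."""
    w, x, y, z = q
    roll = math.atan2(2 * (w * x + y * z), 1 - 2 * (x * x + y * y))
    sin_pitch = max(-1.0, min(1.0, 2 * (w * y - z * x)))  # guard against rounding
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2 * (w * z + x * y), 1 - 2 * (y * y + z * z))
    return (math.degrees(roll), math.degrees(pitch), math.degrees(yaw))


# Example: neutral posture facing a 30 deg heading, then a 20 deg lean about the body y axis.
q_neutral = (math.cos(math.radians(15)), 0.0, 0.0, math.sin(math.radians(15)))
q_lean = (math.cos(math.radians(10)), 0.0, math.sin(math.radians(10)), 0.0)
q_now = quat_multiply(q_neutral, q_lean)
roll, pitch, yaw = to_euler_zyx_deg(relative_orientation(q_neutral, q_now))
```

In this example the relative pitch comes out at 20° while roll and yaw stay at zero, because the 30° heading present at calibration is removed by the relative orientation.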

FlyJacket

The FlyJacket has been tested for the teleoperation of a real drone (see Fig. 2). The flight was performed with a quadcopter mimicking the flight dynamics of a fixed-wing drone (see Cherpillod et al. for details of the control). The drone can stream real-time video feedback to the user's goggles. The test simulates a search-and-rescue mission in which the user operates a drone to geotag points of interest, for example injured people or dangerous areas. These points of interest then populate a map that can facilitate planning the intervention. During the test, the user wore the FlyJacket with the arm support and a smart glove capable of detecting predefined finger gestures through capacitive sensors placed on each finger and on the palm.

The trajectory of the drone can be followed on a computer. Red and green dots are points of interest set by the user during the flight. Different colors can describe the nature of each recorded point; in the simulated rescue mission, for example, green marks injured people to be rescued and red marks dangerous areas. The point of interest appears at the center of the drone's field of view (white cross in Fig. 2b). The numbers above the recorded points indicate the estimated distance in meters between each point and the drone. Points of interest can be added and removed directly during the flight.
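The geotagging step described above can be illustrated with a small Python sketch: the point at the center of the camera view is projected along the optical axis to the estimated distance, then stored with its color category so it can later be removed. The source does not say how the distance is estimated, so the sketch simply takes it as an input. It also assumes a flat local East-North-Up (ENU) frame in meters, a compass-style heading (0° = north, 90° = east) and a view pitch measured downward from the horizon; `PointOfInterest`, `geotag` and `PoiMap` are hypothetical names, not part of the system described here.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]  # (east, north, up) in meters, local ENU frame


@dataclass
class PointOfInterest:
    east: float      # m
    north: float     # m
    up: float        # m
    category: str    # e.g. "green" = injured person, "red" = dangerous area
    distance: float  # estimated drone-to-point distance, m


def geotag(drone_enu: Vec3, heading_deg: float, view_pitch_deg: float,
           distance: float, category: str) -> PointOfInterest:
    """Project the center of the camera view out to the estimated distance.

    heading_deg: azimuth of the optical axis (assumed 0 = north, 90 = east).
    view_pitch_deg: angle of the optical axis below the horizon (positive = down).
    """
    h = math.radians(heading_deg)
    p = math.radians(view_pitch_deg)
    horizontal = distance * math.cos(p)  # ground-plane component of the line of sight
    return PointOfInterest(
        east=drone_enu[0] + horizontal * math.sin(h),
        north=drone_enu[1] + horizontal * math.cos(h),
        up=drone_enu[2] - distance * math.sin(p),
        category=category,
        distance=distance,
    )


class PoiMap:
    """Minimal store so points can be added and removed during the flight."""

    def __init__(self) -> None:
        self._points: Dict[int, PointOfInterest] = {}
        self._next_id = 0

    def add(self, poi: PointOfInterest) -> int:
        poi_id = self._next_id
        self._points[poi_id] = poi
        self._next_id += 1
        return poi_id

    def remove(self, poi_id: int) -> bool:
        # Returns True if a point was actually removed.
        return self._points.pop(poi_id, None) is not None

    def by_category(self, category: str) -> List[PointOfInterest]:
        return [p for p in self._points.values() if p.category == category]


poi_map = PoiMap()
victim = geotag((0.0, 0.0, 50.0), heading_deg=90.0, view_pitch_deg=30.0,
                distance=100.0, category="green")
victim_id = poi_map.add(victim)
```

Keeping an id per point makes removal during the flight a constant-time dictionary operation; a real system would also need a proper geodetic conversion instead of the flat-frame assumption used here.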

Acknowledgments

This work was supported by the Swiss National Science Foundation through the National Centre of Competence in Research Robotics (NCCR Robotics).

References

- J. Miehlbradt, A. Cherpillod, S. Mintchev, M. Coscia, F. Artoni, D. Floreano, S. Micera, “A data-driven body-to-machine interface for the effortless control of drones”, submitted for publication.

- C. Rognon, S. Mintchev, F. Dell'Agnola, A. Cherpillod, D. Atienza, D. Floreano, “FlyJacket: an upper-body soft exoskeleton for immersive drone control”, IEEE Robotics and Automation Letters, 2018.

- A. Cherpillod, S. Mintchev, D. Floreano, “Embodied Flight with a Drone”, arXiv:1707.01788, 2017.
