When rescue service personnel go to the aid of people trapped in a burning or unsafe building, they are entering the unknown: they do not know what dangers they will face, or what obstacles stand between them and the people they have come to save. In a fire or after an earthquake, first responders can quickly become disorientated inside a building.

Emergency services personnel, soldiers, and others could operate more quickly and safely if they had a navigation aid inside an unknown building. But indoors, GPS signals are unavailable or patchy. So how can a person's position be tracked indoors, in a tunnel, or underground, in the absence of satellite navigation?

This was the challenge that the Canadian government asked a team of experts in the Embedded and Multi-sensor Systems Lab (EMSLab) at Ottawa’s Carleton University to solve. The system that they developed has a special motion tracker at its heart. Small in size but big in importance, the MTi-300 Attitude and Heading Reference System (AHRS) from Xsens provides a secure foundation for the operation of a sophisticated array of radio and optical sensors which build a 3D map of any building as the operator moves through it.

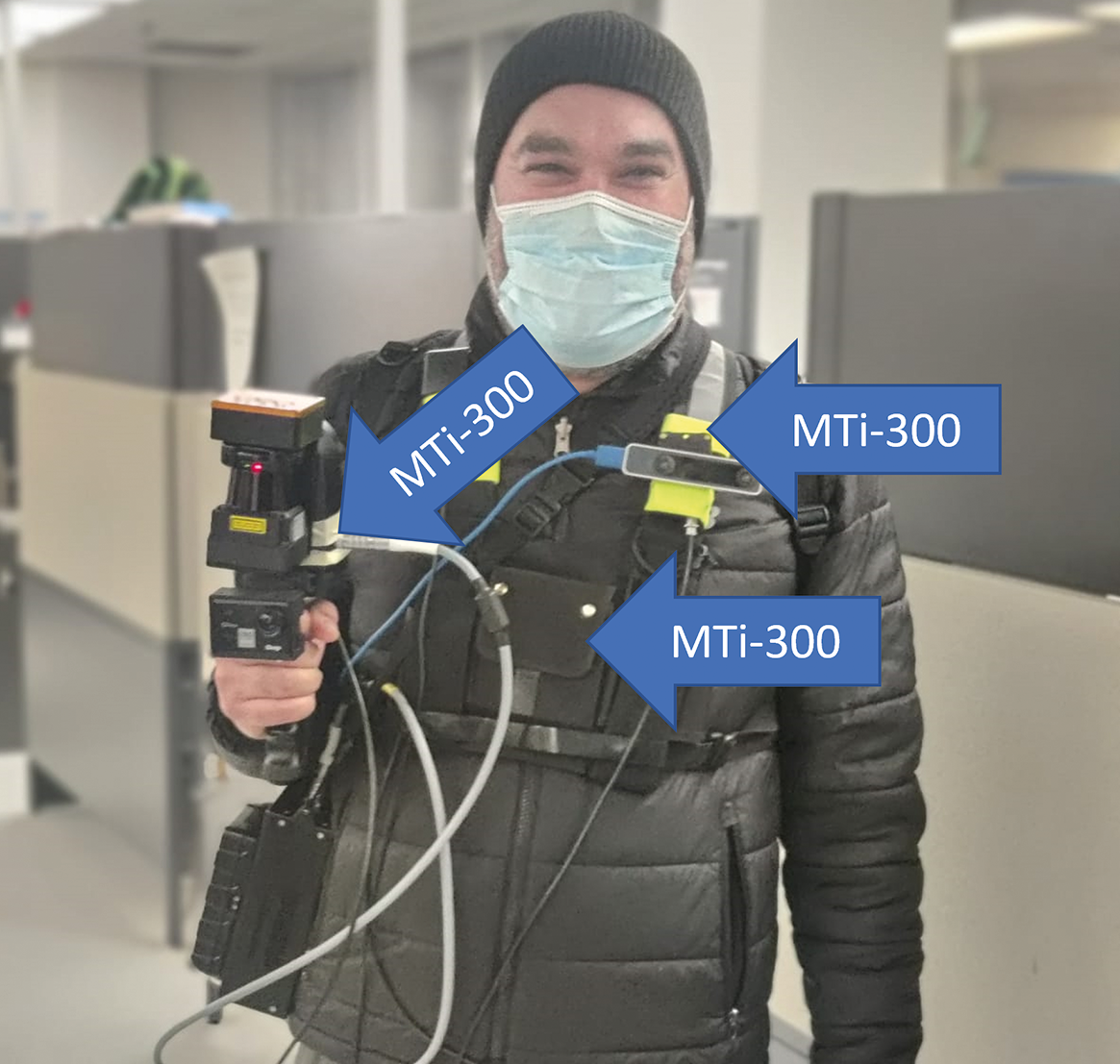

The EMSLab system’s camera, LiDAR sensor, and UWB receiver each use an MTi-300 AHRS to provide a consistent 3D frame of reference

How to navigate without a map

The Canadian government’s specification for the Carleton University research project was as hard as it gets in the world of SLAM (Simultaneous Localization and Mapping) technology: to precisely track a person’s motion through 3D space, in any indoor location, in real time, with no map or plan available before entering the building.

To do this, the Carleton University research group developed a portable apparatus to be carried by each person who is to be tracked. This apparatus consists of an array of radio, optical, and motion sensors working in concert. Project leader Mohamed Atia, Associate Professor in the Department of Systems and Computer Engineering at Carleton University, says, ‘To track the location of individuals in 3D space, we made a single, multi-sensor system which can be carried or worn by a person. The system we developed is comprised of a vision camera, a LiDAR laser sensor for ranging and object detection, and an ultra-wideband (UWB) receiver, which locates the individual in 3D space relative to UWB beacons dropped at intervals as the operator moves through the building.’
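The UWB part of this arrangement is a ranging problem: if the receiver measures its distance to several beacons whose positions are known, its own position follows from multilateration. The sketch below is a generic linearized least-squares solver, not the Carleton implementation. It assumes noise-free ranges, beacon coordinates already known in a common frame, and at least four beacons that are not all coplanar; the function names `multilaterate` and `solve3` are hypothetical.

```python
import math


def solve3(m: list[list[float]], v: list[float]) -> list[float]:
    """Solve the 3x3 linear system m @ x = v by Gaussian elimination with partial pivoting."""
    a = [list(row) + [rhs] for row, rhs in zip(m, v)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        if abs(a[col][col]) < 1e-12:
            raise ValueError("beacon geometry is degenerate")
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):  # back substitution
        x[r] = (a[r][3] - sum(a[r][c] * x[c] for c in range(r + 1, 3))) / a[r][r]
    return x


def multilaterate(beacons: list[tuple[float, float, float]],
                  ranges: list[float]) -> tuple[float, ...]:
    """Estimate a 3D position from ranges to known beacons (linearized least squares).

    Subtracting the first sphere equation |x - b0|^2 = r0^2 from each other one gives
    the linear equations 2 (bi - b0) . x = |bi|^2 - |b0|^2 - ri^2 + r0^2.
    """
    if len(beacons) < 4 or len(beacons) != len(ranges):
        raise ValueError("need at least 4 beacons with one range each")
    b0, r0 = beacons[0], ranges[0]
    n0 = sum(c * c for c in b0)
    rows, rhs = [], []
    for b, r in zip(beacons[1:], ranges[1:]):
        rows.append([2.0 * (b[k] - b0[k]) for k in range(3)])
        rhs.append(sum(c * c for c in b) - n0 - r * r + r0 * r0)
    # Normal equations (A^T A) x = A^T y give the least-squares fit for 4 or more beacons.
    ata = [[sum(row[i] * row[j] for row in rows) for j in range(3)] for i in range(3)]
    atb = [sum(row[i] * y for row, y in zip(rows, rhs)) for i in range(3)]
    return tuple(solve3(ata, atb))


if __name__ == "__main__":
    beacons = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0),
               (0.0, 0.0, 3.0), (10.0, 10.0, 3.0)]
    truth = (3.0, 4.0, 1.5)
    ranges = [math.dist(truth, b) for b in beacons]
    print(multilaterate(beacons, ranges))
```

If every beacon lies on one floor, the height coordinate cannot be recovered from the ranges alone and the solver raises an error. This toy example suggests why UWB ranging on its own is unlikely to suffice in a multi-story building.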

In combination, these technologies can create a 3D, multi-story map showing obstacles such as walls, windows, doors, and floors, and can position the carrier within that map in real time.

The biggest technical difficulty in implementing this system is combining the multiple sensor inputs, a process known as sensor fusion. Since the Carleton University system is worn or carried, it is continually rising, falling, rotating, and tilting in 3D space as the operator walks, crawls, or runs. Left uncorrected, these pitch, roll, and yaw movements make the LiDAR, camera, and UWB inputs inconsistent with each other, and with their own earlier outputs.
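Correcting a measurement for the carrier's motion requires the orientation at the instant that measurement was taken, and the sensors rarely sample at exactly the same moments. A minimal sketch, assuming a timestamped heading (yaw) log from an orientation sensor, interpolates that log to a LiDAR or camera timestamp. The data layout and function names are hypothetical, not part of any Xsens SDK, and a full system would interpolate the complete 3D orientation (for example with quaternion slerp) rather than yaw alone.

```python
import bisect


def wrap_deg(angle: float) -> float:
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def interpolate_yaw(times: list[float], yaws: list[float], t: float) -> float:
    """Linearly interpolate a sorted yaw log (degrees) at time t (seconds).

    The difference between neighbouring samples is wrapped first, so the
    interpolation takes the short way round the +/-180 degree boundary.
    """
    if not times[0] <= t <= times[-1]:
        raise ValueError("t is outside the logged interval")
    i = bisect.bisect_right(times, t) - 1  # times[i] <= t < times[i + 1]
    if i == len(times) - 1:  # t coincides with the last sample
        return wrap_deg(yaws[-1])
    frac = (t - times[i]) / (times[i + 1] - times[i])
    delta = wrap_deg(yaws[i + 1] - yaws[i])
    return wrap_deg(yaws[i] + frac * delta)
```

For a heading log of 170°, 179°, and -172° at 0, 10, and 20 ms, a scan stamped at 15 ms is assigned a heading of -176.5°. Naive interpolation would instead report about 3.5°, pointing the scan the wrong way entirely.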

The solution is a stable, accurate 3D frame of reference, provided by three MTi-300 AHRS units. An MTi-300 AHRS attached to each of the LiDAR sensor, camera, and UWB receiver measures every change in roll, pitch, and yaw at that sensor with high precision, enabling continuous, real-time compensation of the sensor’s outputs. The compensated outputs can then be fed into a map that always stays level relative to the earth’s surface, whatever position the carrier’s body is in when each measurement is taken.
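The compensation described above amounts to a coordinate transformation. Each point or feature measured in a sensor's own tilted frame is rotated by that sensor's roll, pitch, and yaw into a frame aligned with the earth, then shifted by the sensor's position. The sketch below assumes the common aerospace Z-Y-X Euler convention with angles in radians. The function names are hypothetical, and a real pipeline might read a quaternion or rotation matrix directly from the AHRS instead of Euler angles.

```python
import math


def rotation_matrix(roll: float, pitch: float, yaw: float) -> list[list[float]]:
    """Sensor-to-level rotation R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians.

    The Z-Y-X convention is an assumption for this sketch, not taken from Xsens documentation.
    """
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def to_level_frame(point, roll, pitch, yaw, sensor_position=(0.0, 0.0, 0.0)):
    """Rotate a sensor-frame point into the level frame, then translate by the sensor position."""
    r = rotation_matrix(roll, pitch, yaw)
    return tuple(
        sum(r[i][j] * point[j] for j in range(3)) + sensor_position[i] for i in range(3)
    )
```

With a yaw of 90°, a LiDAR return 1 m straight ahead of the sensor, `(1, 0, 0)`, lands at approximately `(0, 1, 0)` in the level frame. Because the matrix is a pure rotation, distances measured by the sensor are preserved.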

Mohamed Atia says, ‘The crucial requirements for the AHRS were accuracy and stability. Even tiny errors in the 3D frame of reference provided by the AHRS are rapidly amplified over the course of a mission, so we needed our chosen AHRS to be accurate, and to maintain its accuracy over time and temperature with almost zero drift. To fit the portable nature of the apparatus, it also needed to be small and light.

‘We studied the market for commercial IMUs carefully, and our evaluation showed that the MTi-300 sensor from Xsens offered the best combination of high performance and small size.’
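To put “tiny errors … rapidly amplified” into numbers: a constant heading error θ over a straight walk of length d displaces the position estimate sideways by d·sin θ, and an uncorrected gyro bias makes θ itself grow linearly with time. The sketch below uses simple geometry that ignores every other error source, and the bias and distance figures are illustrative assumptions, not MTi-300 specifications.

```python
import math


def cross_track_error(distance_m: float, heading_error_deg: float) -> float:
    """Sideways position error after walking distance_m in a straight line with a
    constant heading error (all other error sources ignored)."""
    return distance_m * math.sin(math.radians(heading_error_deg))


def drifted_heading_error(bias_deg_per_hour: float, minutes: float) -> float:
    """Heading error accumulated by integrating a constant, uncorrected gyro bias."""
    return bias_deg_per_hour * minutes / 60.0


if __name__ == "__main__":
    print(round(cross_track_error(100.0, 1.0), 2))
    heading_err = drifted_heading_error(10.0, 30.0)
    print(heading_err, round(cross_track_error(100.0, heading_err), 2))
```

With these assumed numbers, a 1° heading error becomes about 1.75 m of sideways error after 100 m. A 10°/h bias left uncorrected for 30 minutes produces a 5° error, or roughly 8.7 m over the same distance.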

The Carleton University system has one other clever trick up its sleeve: users wear the MTw Awinda wireless motion capture system, also from Xsens, which allows the system to recognize types of motion, such as walking and crawling, and to perform dead reckoning in the temporary absence of UWB signals. Mohamed Atia says that wireless technology is an important feature of the Awinda product because it makes the SLAM system more easily wearable. He adds, ‘The MTw Awinda system also performs synchronized data sampling accurate to ±10 µs, which gives us a time reference for the fusion of all the other sensors in the system.’
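Dead reckoning carries the position estimate forward from the last UWB fix using motion data alone. One common form for people on foot, pedestrian dead reckoning, adds a step length along the current heading for each detected step. The sketch below illustrates that general technique under stated assumptions and does not describe how the Carleton system uses MTw Awinda data. Step detection and step-length estimation are omitted, and the step list in the example is hypothetical.

```python
import math


def dead_reckon(start: tuple[float, float],
                steps: list[tuple[float, float]]) -> list[tuple[float, float]]:
    """Step-and-heading dead reckoning in the horizontal plane.

    start: last known (x, y) position in metres, e.g. from a UWB fix.
    steps: (step_length_m, heading_rad) pairs, heading measured from the x axis.
    Returns the track, including the start point.
    """
    x, y = start
    track = [(x, y)]
    for length, heading in steps:
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        track.append((x, y))
    return track


if __name__ == "__main__":
    # Hypothetical walk: four 0.7 m steps along x, then a left turn and two more steps.
    walk = [(0.7, 0.0)] * 4 + [(0.7, math.pi / 2)] * 2
    print(dead_reckon((0.0, 0.0), walk)[-1])
```

Each step's error adds to the last, so the estimate degrades with distance. This is why dead reckoning is suited to bridging temporary gaps in UWB coverage rather than replacing it.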

A life-saving innovation

Early field trials of the portable Carleton University system prove in principle that accurate, reliable SLAM can be implemented in any indoor 3D space without satellite positioning signals. Mohamed Atia’s hope is that, after further refinement, this technology will one day help save lives. ‘In the military, industrial or emergency services settings, the ability to know exactly where personnel is inside a building could be a game-changer,’ he says. ‘When a first responder is injured or calls for assistance, colleagues will know exactly where to go, and so personnel can be deployed precisely where and when they are needed. In stressful environments such as a building fire or the battlefield, speed saves lives. Our technology, with the MTi-300 at its heart, will enable people to be found and helped more quickly.’
